How AI news headlines and illustrations influence perceptions of the technology

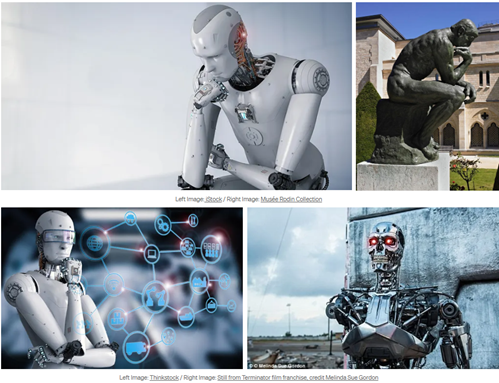

Close your eyes and try to imagine what artificial intelligence looks like. What comes to mind? A Terminator-style robot with ominous red eyes, a blue human brain levitating among glittering numbers and formulas, or a white humanoid robot mimicking Rodin’s “Thinker”? These are the most stereotypical depictions of AI on the Internet.

Try typing the phrase “artificial intelligence” into Google’s image search engine and you’ll see for yourself.

As the number of online publications about artificial intelligence grows, so do the myths about it. These myths owe much to the way the mass media present AI: clickable headlines and a particular selection of images. A few years ago, AI researcher Daniel Leifer even created a website dedicated to AI myths, which he identified after analyzing media reports for more than six months. The myths range from the overly optimistic (AI is a magical miracle that will save the world) to the overly pessimistic (AI is a weapon of mass destruction).

Here are our top three myths about artificial intelligence that spread through the media.

MYTH 1: AI HAS FREEDOM OF ACTION

“AI discovered a new antibiotic”, “AI wrote a text based on the Bible”, “AI surpassed doctors in predicting heart attacks”: the media often write about artificial intelligence as the protagonist of scientific discoveries and achievements.

Leifer points out that such wording is misleading, because it creates the illusion that AI can perform the same actions as humans. It attributes to AI abilities it does not have, in particular the ability to act independently, or free will. When people see the headline “AI Discovers New Antibiotic,” they may picture an AI that conducted scientific experiments and analyzed data on its own to discover new drugs.

However, this picture is far from reality and hides the role of humans in these achievements. First, it is humans who developed the AI system, then programmed and calibrated it to achieve particular results. Second, a discovery or achievement is usually the result of analyzing a large body of data with the help of AI. For the system to perform this analysis, people first had to collect the data, ensure there was enough of it, and make sure it was a balanced representation of the problem to be solved. All of this takes months, if not years, of painstaking human work. Finally, the results produced by artificial intelligence must be correctly interpreted and verified, because the analysis can be wrong.

Leifer therefore stresses that AI is nothing more than a tool we use to achieve our goals, just like a pen, a calculator or a computer. We would normally say “I wrote this essay with a pen” rather than “this pen wrote the essay.” Leifer urges us to keep this in mind the next time we see another sensational headline about AI achieving something.

MYTH 2: AI IS LIKE HUMANS

In addition to headlines, an important element in the presentation of information in the mass media is the image. Digital sociology researcher Lisa Talia Moretti and Anne Troyer Rogers, founder of arts and innovation consultancy Culture A, analyzed more than 1,000 news stories and found that AI was most often portrayed as human-like.

The researchers note that AI is typically depicted as a white or silver robot with the same eyes, mouth, torso and hands as a human. Sometimes these robots show signs of aggression, such as bared teeth and glowing red eyes. However, AI is not always closely related to robots. For example, ChatGPT is built on artificial intelligence, but it is a chatbot, so illustrating it with an image of a cyborg would be wrong. Even when a robot is powered by AI, such as an object recognition robot, it usually does not look human at all. In addition, some images illustrate technologies that do not currently exist and are unlikely to be created in the future, such as a humanoid robot listening to a patient with a stethoscope.

According to the researchers, another misleading aspect of portraying artificial intelligence as human-like lies in the actions illustrated. Robots are often depicted as if they were deep in thought or solving complex mathematical problems on a blackboard. This creates the illusion that AI takes over extremely complex tasks from humans, which emphasizes its superiority over them. It can fuel unrealistic fears and expectations about AI advances: human-level intelligence, or even a “superintelligence” that could wipe out humans in the near future. However, the main goal of AI developers is not to replace people but to extend their capabilities. For example, AI can help draft texts for high-level political speeches or process large volumes of text to analyze customer reviews.

The research group “Better Images of AI” also believes that such misleading illustrations, like misleading headlines, hide human involvement in, and credit for, the achievements and discoveries made with AI. The group has developed a gallery of more appropriate AI images that illustrate the realistic state of the technology. Their goal is to emphasize that AI is a technology made by humans for humans, not a technology that looks and acts like a human.

MYTH 3: AI IS ALMIGHTY

Sensational headlines, as in the first myth, and science fiction images, as in the second, can create the impression that AI is capable of anything. The media usually report only the successes and achievements in the field, so the public has a very blurry idea of what AI cannot do. Yet some things that come easily to humans pose a significant challenge to artificial intelligence.

First, AI does not know how to use “common sense”. Consider the following statements: “The man went to the restaurant. He ordered a steak. He left a big tip.” What did he eat in the restaurant? Most people will immediately answer: steak. However, AI will usually lack the context to answer, because it has no data about social norms. For the AI to give the correct answer, someone has to describe in detail what usually happens in a restaurant, whereas we need no additional explanation.

Second, AI does not know how to establish cause-and-effect relationships. If you give AI data of the right quality and quantity, it will have no problem determining that roosters crow when the sun rises. But AI won’t be able to determine whether the rooster’s crowing causes the sun to rise or the sunrise causes the rooster to crow.
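The rooster example can be made concrete with a small sketch in Python. The observations below are invented purely for illustration, and the `pearson` helper is our own, not part of any AI system. It shows that a correlation measure gives the same value whichever variable is treated as the “cause”, so the number alone cannot reveal the direction of the relationship.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical observations, one per time slot: 1 = yes, 0 = no.
sun_rising = [0, 0, 0, 1, 1, 0, 0, 0, 1, 1]
rooster_crowing = [0, 0, 0, 1, 1, 0, 0, 1, 1, 1]

# The measure is symmetric: swapping the arguments changes nothing.
r_sun_rooster = pearson(sun_rising, rooster_crowing)
r_rooster_sun = pearson(rooster_crowing, sun_rising)

print(f"sun vs. rooster: {r_sun_rooster:.3f}")
print(f"rooster vs. sun: {r_rooster_sun:.3f}")
```

Both printed values are identical: the data say that crowing and sunrise go together, but nothing in the calculation says which one drives the other. Settling that question takes outside knowledge or an experiment, which is exactly what a human brings to the analysis.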

Third, AI cannot distinguish what is ethical from what is not. Microsoft once launched an AI-based chatbot on Twitter that anyone could chat with. The chatbot, in turn, collected new data from these conversations to “learn” new sentence structures and conversational styles. When people found out about this, they began sending the chatbot insulting messages en masse as a joke, such as “Hitler was right” and “I hate feminists and they should all die and burn in hell”. As a result, the chatbot started producing sexist and racist sentences. The AI had no ability to evaluate the ethical significance of such statements; it simply copied the language style of the “jokers”.

Artificial intelligence is a technology that is developing very quickly. When you hear about self-driving cars, images created from a single sentence, and websites programmed by chatbots, you involuntarily start equating AI with a superbeing that can do anything. But if you look more critically at the information provided in the media, you can see that artificial intelligence is, in the end, a powerful tool, but still just a tool, similar to a phone or a computer.

Source: https://www.ukrinform.ua/